Difference between revisions of "Artificial Neural Networks"

Revision as of 23:20, 30 November 2004

Description:

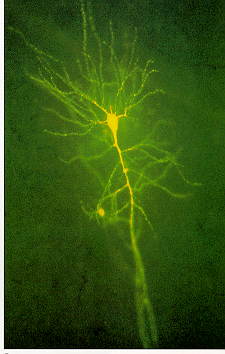

Artificial Neural Networks (ANNs) are information processing models inspired by the way biological nervous systems, such as the brain, process information. The models are composed of a large number of highly interconnected processing elements (neurones) working together to solve specific problems. ANNs, like people, learn by example. Unlike conventional computers, which can only solve a problem if the set of instructions or the algorithm is known, ANNs are very flexible, powerful and trainable. Conventional computers and neural networks are complementary: a large number of tasks require a combination of a learning approach and a set of instructions. In most cases, the conventional computer is used to supervise the neural network.
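
To make "learning by example" concrete, here is a minimal sketch of a single processing element trained on examples of the logical OR function. It assumes a simple threshold unit and the classic perceptron learning rule; the function names, the learning rate and the number of passes are illustrative choices, not something taken from this page.

```python
# Minimal sketch (illustrative names and parameters): one artificial neuron
# that learns the OR function from examples instead of being given a rule.

def predict(weights, bias, inputs):
    """Output 1 if the weighted sum of the inputs plus the bias is positive, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train(examples, epochs=20, rate=0.1):
    """Perceptron learning rule: adjust weights whenever an example is misclassified."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)  # -1, 0 or +1
            weights = [w + rate * error * x for w, x in zip(weights, inputs)]
            bias += rate * error
    return weights, bias

# Each example pairs an input pattern with the desired output.
OR_EXAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights, bias = train(OR_EXAMPLES)
outputs = [predict(weights, bias, inputs) for inputs, _ in OR_EXAMPLES]
print(outputs)  # [0, 1, 1, 1]
```

No rule for OR is written anywhere in the code: the weights start at zero and are shaped only by the examples. Practical networks connect many such units, but the principle is the same.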

For more information: http://en.wikipedia.org/wiki/Neural_network

Enablers:

1. Research & Development: mathematicians, psychologists, neurosurgeons, etc.

2. Applications using artificial neural networks (e.g. sales forecasting, data validation, etc., from NeuroDimension)

3. Funding from international institutes such as IST

Inhibitors:

1. Outcome ethical issues: Is there a danger in developing technologies that might perform similar (thinking) functions to the human brain?

2. Research ethical issues: Is it ethical to perform research and do experiments on the human brain and its functions?

Paradigms:

Experts:

Timing:

1933: the psychologist Edward Thorndike suggests that human learning consists in the strengthening of some (then unknown) property of neurons.

1943: the first artificial neuron was produced by the neurophysiologist Warren McCulloch and the logician Walter Pitts.

1949: psychologist Donald Hebb suggests that a strengthening of the connections between neurons in the brain accounts for learning.

1954: the first computer simulations of small neural networks at MIT (Belmont Farley and Wesley Clark).

1958: Rosenblatt designed and developed the Perceptron, a neural network with three layers.

1969: Minsky and Papert generalised the limitations of single-layer Perceptrons to multilayered systems (e.g. the XOR function cannot be computed by a 2-layer Perceptron).

1986: David Rumelhart & James McClelland train a network of 920 artificial neurons to form the past tenses of English verbs (University of California at San Diego).
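
The XOR limitation mentioned in the 1969 entry can be illustrated with a short sketch. It assumes simple threshold units; the coarse weight grid and the hand-picked weights of the second network are illustrative choices, and the grid search is only a demonstration, not a proof.

```python
from itertools import product

# Minimal sketch (illustrative names and weights): a single threshold unit
# cannot reproduce XOR, while a network with one extra hidden layer can.

def unit(weights, bias, inputs):
    """Threshold unit: output 1 if the weighted sum plus bias is positive, else 0."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0

INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
XOR = [0, 1, 1, 0]

# Try every combination of two weights and a bias from -4.0 to 4.0 in steps of 0.5.
grid = [i / 2 for i in range(-8, 9)]
single_unit_solutions = [
    (w1, w2, b)
    for w1, w2, b in product(grid, repeat=3)
    if [unit((w1, w2), b, x) for x in INPUTS] == XOR
]

def xor_network(x):
    """Hand-wired network with a hidden layer: XOR = OR and not AND."""
    h_or = unit((1, 1), -0.5, x)    # fires if at least one input is 1
    h_and = unit((1, 1), -1.5, x)   # fires only if both inputs are 1
    return unit((1, -1), -0.5, (h_or, h_and))

print(len(single_unit_solutions))           # 0
print([xor_network(x) for x in INPUTS])     # [0, 1, 1, 0]
```

The search finds no weights for a single unit because XOR is not linearly separable: no straight line splits the inputs (0,1) and (1,0) from (0,0) and (1,1). The hidden layer removes that restriction.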

Web Resources:

1. http://www.doc.ic.ac.uk/~nd/surprise_96/journal/vol4/cs11/report.html